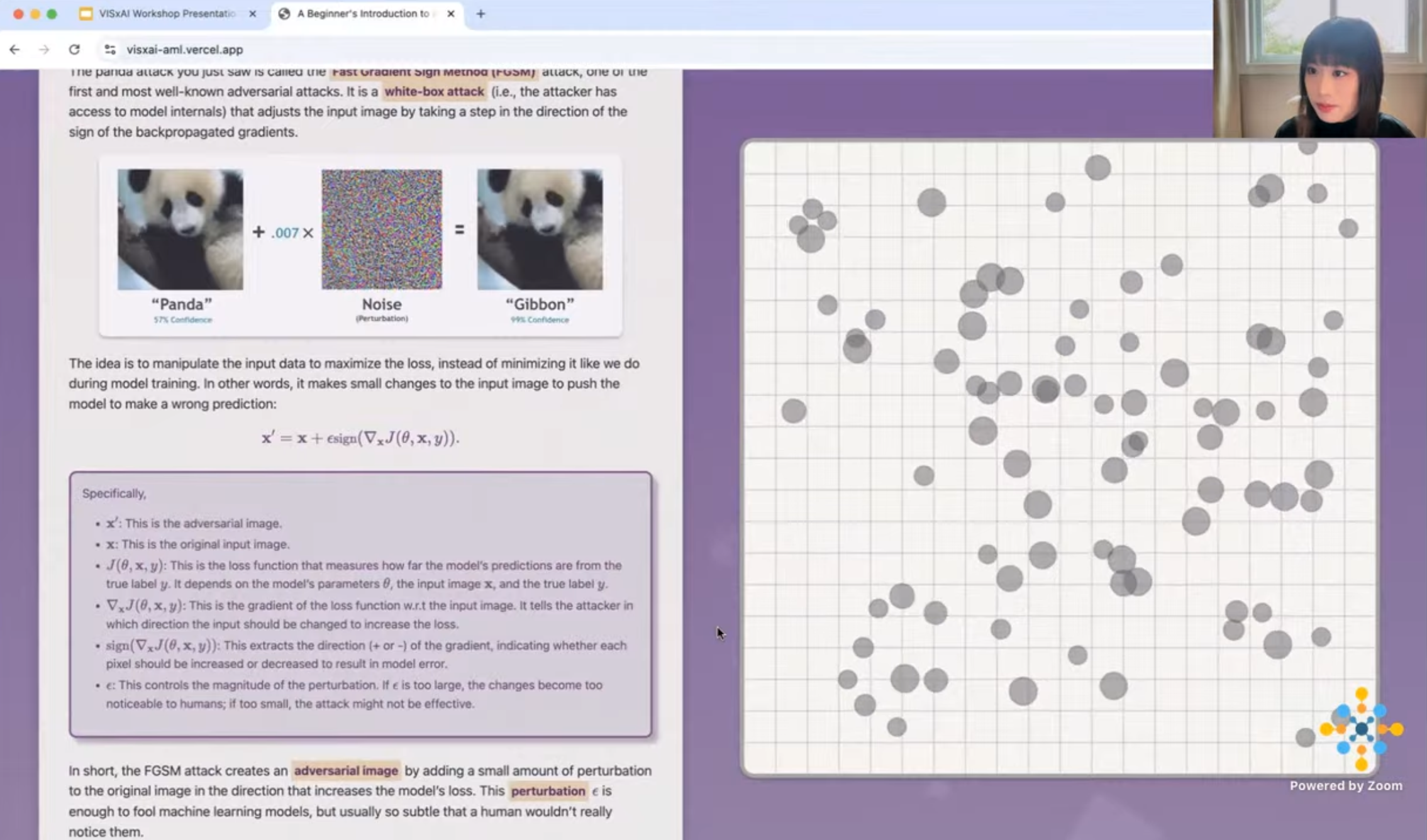

"Panda or Gibbon? A Beginner's Introduction to Adversarial Attacks" is an interactive, beginner-friendly visual guide that introduces AI learners to adversarial attacks in machine learning, focusing on the Fast Gradient Sign Method (FGSM) attack. Built primarily with D3.js and Idyll-lang, our interactive explainable uses dynamic visualizations and animations to show how subtle, human-imperceptible perturbations can fool image classification models such as ResNet-34 into making incorrect predictions. Users can explore how these attacks change model behavior in two ways: by comparing clean and perturbed data points, and by comparing two ResNet-34 models, one trained with standard training and one with adversarial training.
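At its core, FGSM shifts every input feature by a fixed step ε in the direction that increases the model's loss: `x_adv = clip(x + ε · sign(∇ₓ L(θ, x, y)), 0, 1)`. The sketch below is a minimal illustration of that formula under stated assumptions. It is not the code behind the explainable. It replaces ResNet-34 with a hypothetical toy logistic-regression classifier whose input gradient has the closed form `(p − y) · w`, and it assumes inputs are scaled to [0, 1], so the result is clipped to that range. With a real network, the gradient would come from backpropagation (for example, PyTorch autograd) instead. The function names are illustrative.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> float:
    # Returns -1.0, 0.0, or 1.0, like torch.sign / numpy.sign
    return float((v > 0) - (v < 0))


def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    """P(class 1 | x) under a hypothetical toy logistic-regression classifier."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def input_gradient(w: list[float], b: float, x: list[float], y: int) -> list[float]:
    """Gradient of the binary cross-entropy loss with respect to the INPUT x.

    For p = sigmoid(w.x + b) and L = -[y log p + (1 - y) log(1 - p)],
    dL/dx = (p - y) * w. A deep network would obtain this via backprop instead.
    """
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]


def fgsm(w: list[float], b: float, x: list[float], y: int, epsilon: float) -> list[float]:
    """One-step FGSM: move each feature by epsilon along the sign of the loss
    gradient, then clip to the assumed valid input range [0, 1]."""
    grad = input_gradient(w, b, x, y)
    return [min(1.0, max(0.0, xi + epsilon * sign(gi))) for xi, gi in zip(x, grad)]
```

As a worked example, take the hypothetical weights `w = [2.0, -3.0, 1.0, 0.5]`, `b = -0.2`, and an input `x = [0.6, 0.2, 0.5, 0.4]` with true label 1. The clean prediction is sigmoid(1.1) ≈ 0.75. `fgsm(w, b, x, 1, epsilon=0.2)` returns roughly `[0.4, 0.4, 0.3, 0.2]`, whose prediction is sigmoid(-0.2) ≈ 0.45. The predicted class flips even though no feature moved by more than 0.2, which is the same effect the guide visualizes on images.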

- Links:

  - Explainable: https://visxai-aml.vercel.app/

  - Video Demo: https://youtu.be/ASEd4f5gMvA

- Recognition & Outreach:

  - Accepted and presented at the 7th VISxAI Workshop at IEEE VIS 2024 (see the VISxAI Workshop Program Info).

- Core Features:

  - Explains adversarial attacks through beginner-friendly interactive visualizations.

  - Explores the impact of the FGSM attack on ResNet-34 models, with insights into natural versus adversarial images and into standard versus adversarial training.

  - Includes embedding-level and instance-level analyses that show how adversarial perturbations affect the models.

- Skills: Python, PyTorch, t-SNE, Adversarial Machine Learning, XAI Visualization, D3.js, Idyll-lang

- Authors: Yuzhe You, Jian Zhao

- Keywords: Adversarial Machine Learning, FGSM Attack, Adversarial Attack, Image Classification, Visualization, ResNet, Model Robustness
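The guide's comparison between standard and adversarial training can be sketched in a similar way. This is a hypothetical illustration, not the authors' ResNet-34 training recipe, which this page does not describe. It reuses a toy logistic-regression classifier. At every epoch, it crafts FGSM examples against the *current* model and takes a gradient step on a 50/50 mix of clean and adversarial inputs, one common variant of FGSM-based adversarial training. Setting `epsilon=0` falls back to standard training. The dataset, `epsilon`, `epochs`, and learning rate are all assumptions chosen for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> float:
    return float((v > 0) - (v < 0))


def predict_proba(w, b, x):
    """P(class 1 | x) for a hypothetical toy logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, epsilon):
    # For binary cross-entropy on a logistic model, dL/dx = (p - y) * w
    p = predict_proba(w, b, x)
    return [min(1.0, max(0.0, xi + epsilon * sign((p - y) * wi)))
            for xi, wi in zip(x, w)]


def train(data, epsilon=0.0, epochs=200, lr=1.0):
    """Full-batch gradient descent; epsilon > 0 enables FGSM adversarial training."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        batch = list(data)
        if epsilon > 0:
            # Attack the model as it is *now*, then train on clean + adversarial inputs
            batch += [(fgsm(w, b, x, y, epsilon), y) for x, y in data]
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in batch:
            err = predict_proba(w, b, x) - y  # dL/dz for cross-entropy loss
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        n = len(batch)
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def accuracy(w, b, data, epsilon=0.0):
    """Accuracy on clean inputs, or on FGSM-perturbed inputs if epsilon > 0."""
    correct = 0
    for x, y in data:
        if epsilon > 0:
            x = fgsm(w, b, x, y, epsilon)
        correct += int((predict_proba(w, b, x) >= 0.5) == bool(y))
    return correct / len(data)


if __name__ == "__main__":
    toy = [([0.9, 0.1], 1), ([0.8, 0.2], 1), ([0.1, 0.9], 0), ([0.2, 0.8], 0)]
    w_std, b_std = train(toy)                # standard training
    w_adv, b_adv = train(toy, epsilon=0.1)   # FGSM adversarial training
    print("standard:", accuracy(w_std, b_std, toy), accuracy(w_std, b_std, toy, 0.1))
    print("adversarial:", accuracy(w_adv, b_adv, toy), accuracy(w_adv, b_adv, toy, 0.1))
```

On a four-point toy set like this, both models can end up equally accurate, so the sketch only shows the mechanics of the training loop. It does not reproduce the robustness differences between the two ResNet-34 models that the explainable visualizes.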